ISTC 2025 Implementation Competition

The ISTC 2025 Error-Correcting Code Contest brought together teams from around the world to tackle one of the field’s classic challenges: designing and decoding codes that balance error-rate performance with computational efficiency.

The competition’s goal was to provide a hands-on, comparative platform for innovation and discussion, showcasing how theory and implementation meet in practice. Participants were evaluated on error-rate vs. SNR performance, decoding complexity, and runtime stability across multiple (K, N) operating points, where K is the number of information bits and N is the code length.
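To make "error rate vs. SNR at a (K, N) operating point" concrete, here is a minimal Monte Carlo sketch of how one such point might be measured. It rests on several assumptions that do not come from the contest's requirements. It uses BPSK over an AWGN channel and expresses SNR as Eb/N0 in dB. A toy (N = 7, K = 4) Hamming code with brute-force maximum-likelihood decoding stands in for a real entry. The helper names `encode`, `build_codebook`, `ml_decode` and `simulate_bler` are hypothetical and are not taken from any team's implementation.

```python
import itertools
import math
import random

# Generator matrix of a (N=7, K=4) Hamming code in systematic form.
# Toy stand-in code for illustration only, not a contest entry.
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def encode(msg, gen=G):
    """Multiply a K-bit message by the generator matrix over GF(2)."""
    n = len(gen[0])
    return [sum(m * row[j] for m, row in zip(msg, gen)) % 2 for j in range(n)]


def build_codebook(gen=G):
    """List all 2^K (message, BPSK codeword) pairs; only feasible for small K."""
    k = len(gen)
    book = []
    for msg in itertools.product((0, 1), repeat=k):
        bits = encode(list(msg), gen)
        # BPSK mapping: bit 0 -> +1.0, bit 1 -> -1.0
        book.append((list(msg), [1.0 - 2.0 * b for b in bits]))
    return book


def ml_decode(y, book):
    """Brute-force ML decoding on AWGN: all codewords have equal energy,
    so ML reduces to picking the codeword with maximum correlation."""
    best_msg, _ = max(book, key=lambda entry: sum(a * b for a, b in zip(y, entry[1])))
    return best_msg


def simulate_bler(ebn0_db, trials, gen=G, seed=0):
    """Estimate the block error rate at one Eb/N0 point by Monte Carlo."""
    rng = random.Random(seed)
    k, n = len(gen), len(gen[0])
    rate = k / n
    ebn0 = 10 ** (ebn0_db / 10)
    # Unit-energy coded symbols: Es/N0 = R * Eb/N0, noise variance = N0 / 2.
    sigma = math.sqrt(1.0 / (2.0 * rate * ebn0))
    book = build_codebook(gen)
    errors = 0
    for _ in range(trials):
        msg, x = book[rng.randrange(len(book))]  # uniformly random message
        y = [xi + rng.gauss(0.0, sigma) for xi in x]
        if ml_decode(y, book) != msg:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    for snr in (0.0, 2.0, 4.0, 6.0):
        print(f"Eb/N0 = {snr:.1f} dB  BLER ~ {simulate_bler(snr, 5000):.4f}")
```

Brute-force ML decoding is exponential in K, which is exactly why practical decoders at larger (K, N) trade some error-rate performance for lower complexity; the sketch only illustrates the measurement loop, not a competitive decoder.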

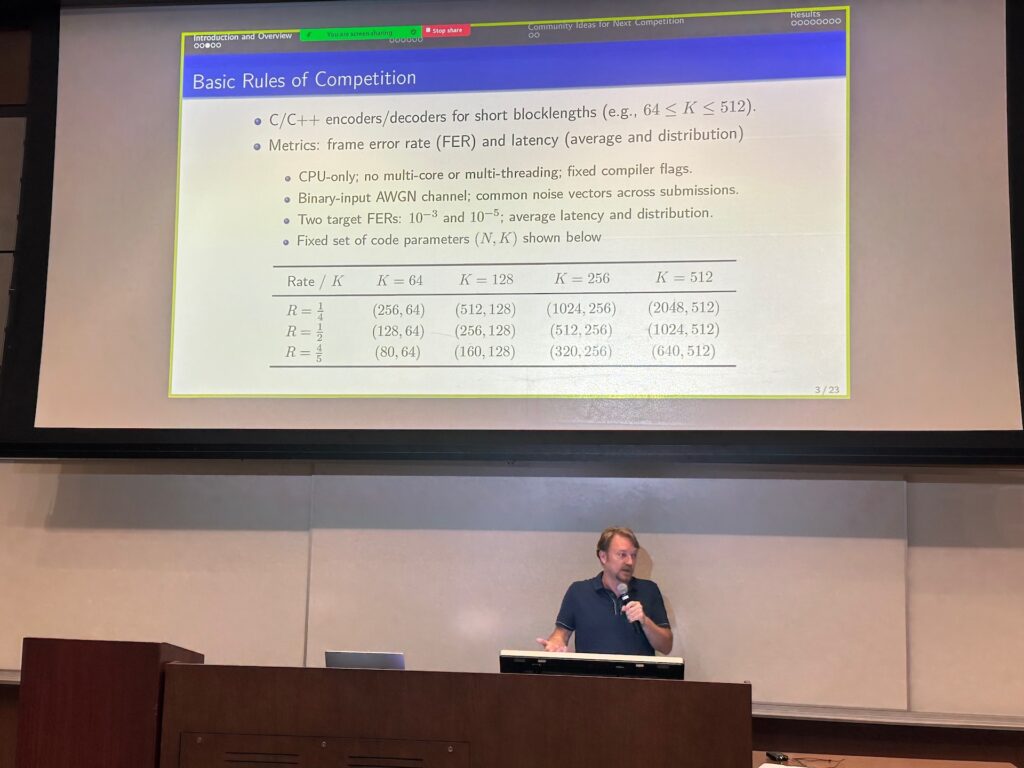


Awards

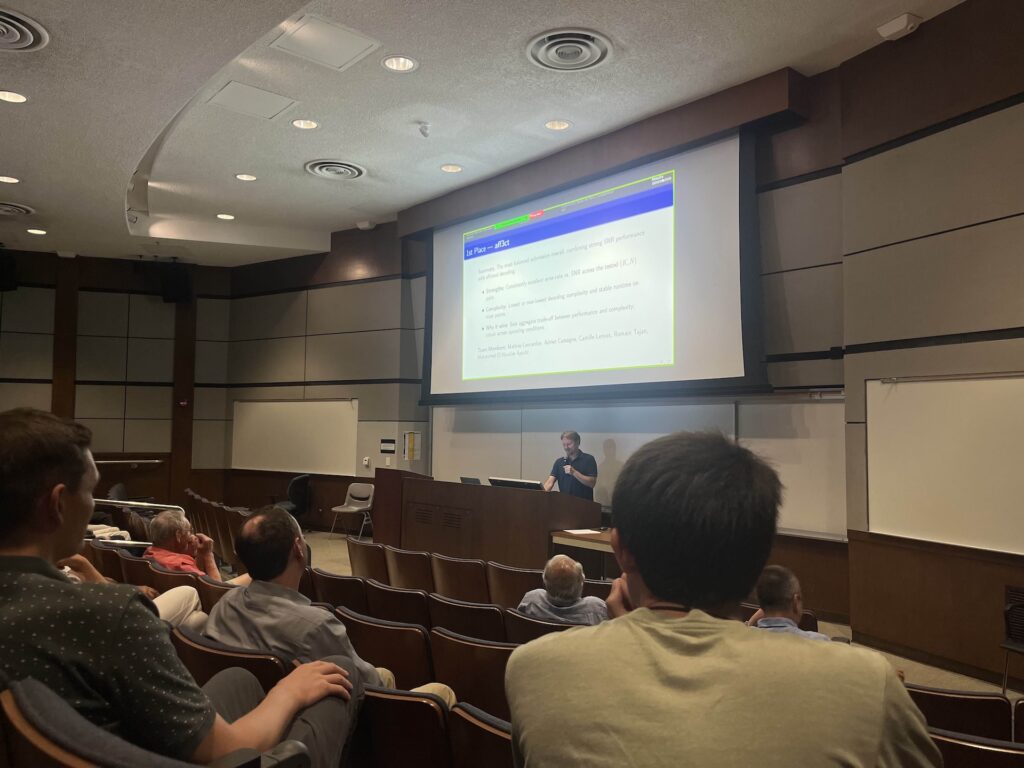

First Place – AFF3CT (pronounced “affect”)

Team Members: Mathieu Léonardon (IMT Atlantique), Adrien Cassagne (Sorbonne Université), Camille Leroux (Bordeaux INP), Romain Tajan (Bordeaux INP), Mohammed El Houcine Ayoubi (IMT Atlantique)

Second Place – SJTU (Shanghai Jiao Tong University)

Team Members: Bosheng Chen, Li Shen, Binghui Shi

Third Place – UCLA (University of California, Los Angeles)

Team Members: Richard Wesel, Wenhui (Beryl) Sui, Zihan (Bruce) Qu

| Metric / Team | AFF3CT | SJTU | UCLA |
| --- | --- | --- | --- |
| Strengths | Consistently excellent error-rate performance across the tested (K, N) pairs. | Sometimes outperformed the winner’s error-rate vs. SNR performance at specific (K, N) pairs and SNR ranges. | Very good error-rate vs. SNR performance across multiple (K, N) settings. |
| Complexity | Lowest or near-lowest decoding complexity, with stable runtime at most operating points. | Typically higher decoding complexity and latency, reducing overall throughput. | Higher decoding complexity and runtime than the leaders. |
| Outlook | Achieved the best aggregate trade-off between performance and complexity, remaining robust across operating conditions. | An excellent result for PAC-style designs; with further complexity optimization, this approach could become a top performer. | With additional algorithmic and implementation optimization, these designs are well positioned to close the complexity gap. |

Congratulations to all participants for their creativity, technical excellence, and contributions to the discussion of modern coding techniques!

Contest Committee

Henry D. Pfister, Gianluigi Liva, and Sebastian Cammerer